k-Means Algorithm

The expectation-maximisation (E-M) algorithm for k-means has the following procedure:

1. Guess some initial cluster centres.
2. Repeat the following until convergence is reached:
   - E-step: assign each point to its nearest cluster centre.
   - M-step: move each cluster centre to the mean position of the points assigned to it.

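The E-step updates our expectation of which cluster each point belongs to, while the M-step updates the cluster centre locations to optimise a fitness function: for k-means, moving each centre to the mean of its assigned points minimises the sum of squared distances between those points and the centre. Each repetition of the E-M loop can therefore only improve the estimate of the cluster characteristics or leave it unchanged, although, as discussed below, it need not reach the best possible solution.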
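For reference, the objective behind these two steps can be written down explicitly. In the notation below (our own, not used elsewhere in this section), $\mathbf{x}_n$ are the $N$ data points, $\boldsymbol{\mu}_k$ are the $K$ cluster centres, $c_n$ is the label of point $n$, and $N_k$ is the number of points with label $k$:

$$
J = \sum_{n=1}^{N} \left\lVert \mathbf{x}_n - \boldsymbol{\mu}_{c_n} \right\rVert^2
$$

$$
\text{E-step:}\quad c_n \leftarrow \operatorname*{arg\,min}_{k} \left\lVert \mathbf{x}_n - \boldsymbol{\mu}_k \right\rVert
\qquad
\text{M-step:}\quad \boldsymbol{\mu}_k \leftarrow \frac{1}{N_k} \sum_{n \,:\, c_n = k} \mathbf{x}_n
$$

The E-step minimises $J$ over the labels with the centres held fixed, and the M-step minimises $J$ over the centres with the labels held fixed (the mean is the point that minimises a sum of squared distances), so neither step can increase $J$.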

Let’s write some code to perform this algorithm ourselves.

First, we need some data to cluster. We use random blobs of data, of the kind produced by scikit-learn's make_blobs function, loaded here from a text file.

import numpy as np

import matplotlib.pyplot as plt

X = np.loadtxt('../data/kmeans.txt')

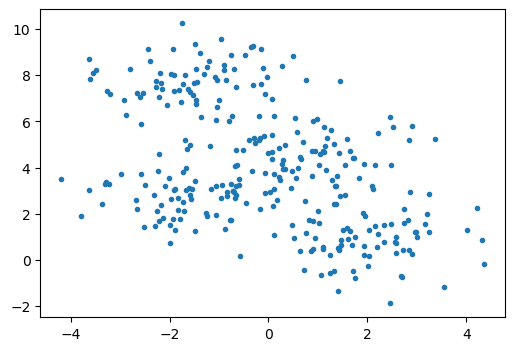

fig, ax = plt.subplots(figsize=(6, 4))

ax.plot(X[:, 0], X[:, 1], '.')

plt.show()

We will try to identify four clusters in this data. Therefore, we set the following.

```python
n_clusters = 4
```

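As a starting position, let’s select four distinct points at random. Sampling without replacement matters: if two initial centres coincided, argmin() would always pick the first of them, leaving the other cluster empty and its mean undefined.

```python
# Pick n_clusters distinct data points to act as the initial centres
i = np.random.choice(X.shape[0], size=n_clusters, replace=False)
centres = X[i]
```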

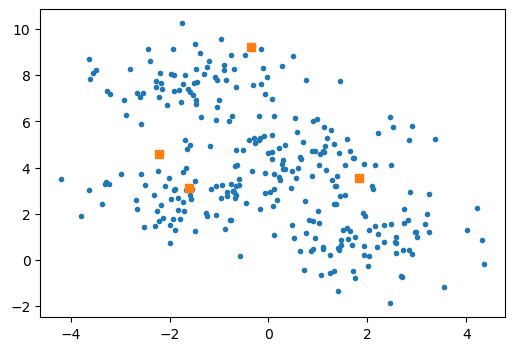

Our initial guess is shown below with orange squares.

fig, ax = plt.subplots(figsize=(6, 4))

ax.plot(X[:, 0], X[:, 1], '.')

ax.plot(centres[:, 0], centres[:, 1], 's')

plt.show()

Clearly, these initial centres are not the arithmetic means of any of the clusters in the data.

The second step (the first of the E-M loop) assigns all the points to their nearest cluster centre.

This can be achieved very efficiently by using NumPy and array broadcasting.

First, we compute the difference vector between each data point in X and each current cluster centre.

```python
vectors = X[:, np.newaxis] - centres
vectors.shape
```

```
(300, 4, 2)
```

Notice that the shape of this array is (number_of_data, number_of_clusters, dimensions).
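To see the broadcasting at work on something small enough to check by hand, here is a toy example with three 2-D points and two centres (the values and the names X_toy and centres_toy are made up for illustration). Inserting a new axis turns an array of shape (3, 2) into shape (3, 1, 2), which NumPy broadcasts against the centres of shape (2, 2) to give shape (3, 2, 2).

```python
import numpy as np

# Toy data: three 2-D points and two candidate centres (illustrative values)
X_toy = np.array([[0.0, 0.0],
                  [1.0, 0.0],
                  [4.0, 3.0]])
centres_toy = np.array([[0.0, 0.0],
                        [4.0, 4.0]])

# (3, 1, 2) - (2, 2) broadcasts to (3, 2, 2):
# entry [n, k] is the vector X_toy[n] - centres_toy[k]
vectors_toy = X_toy[:, np.newaxis] - centres_toy
print(vectors_toy.shape)            # (3, 2, 2)
print(vectors_toy[2, 1].tolist())   # [0.0, -1.0]
```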

The magnitude of each of these vectors is the Euclidean distance from a data point to a centre; we want the magnitude along the dimensions axis of the array (the final axis, hence axis=-1).

```python
magnitudes = np.linalg.norm(vectors, axis=-1)
magnitudes.shape
```

```
(300, 4)
```

We now have the magnitude of the vector from each data point to each cluster centre.

We want to know which centre each data point is closest to, so we use the argmin() method of the NumPy array.

This returns the index of the minimum value (the min() method would return the value itself, which we aren’t interested in).

The argmin() method should be applied only along the cluster axis (axis=1), so that we end up with an array of 300 indices, one per data point.

```python
labels = magnitudes.argmin(axis=1)
print(labels)
```

```
[1 3 2 3 2 2 1 2 3 3 1 3 2 0 2 2 2 2 1 1 2 2 2 1 1 0 2 2 1 1 3 0 2 0 3 0 0
 3 1 2 2 1 0 2 1 1 3 1 3 2 0 2 3 2 2 1 3 1 3 2 0 2 3 1 1 1 0 2 3 1 2 2 3 1
 1 3 1 2 2 3 2 2 2 2 3 2 2 0 0 3 2 2 3 1 1 2 2 2 0 0 3 2 3 2 2 2 2 2 0 2 1
 0 2 3 2 2 3 2 2 0 1 2 1 2 2 2 2 1 2 1 3 1 1 2 0 1 0 0 1 3 3 1 2 0 2 1 0 1
 3 3 3 2 0 0 2 1 3 1 2 2 3 2 2 2 2 1 1 0 2 2 2 3 2 2 0 3 2 2 2 1 2 2 1 1 0
 2 2 2 2 0 1 1 2 2 1 1 1 2 1 3 2 1 2 1 2 0 1 3 2 0 2 1 0 2 3 1 1 2 2 2 3 2
 2 0 2 1 1 3 3 2 2 3 2 1 1 2 2 1 3 1 2 0 2 3 3 0 3 1 1 0 2 1 2 2 1 0 2 2 2
 3 2 0 0 2 3 1 2 3 2 2 2 1 1 2 2 2 2 2 3 3 2 2 2 2 2 2 0 1 3 2 2 2 0 3 3 2
 2 2 3 1]
```

This array, labels, assigns each data point to its closest cluster centre, completing the E-step of the E-M loop.
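The E-step above can be wrapped into a small function. The sketch below (the name assign_labels is our own) makes one change: it compares squared distances instead of distances. Because the square root is monotonically increasing, the index of the smallest squared distance is the index of the smallest distance, so the labels are the same while a square root per entry is skipped.

```python
import numpy as np

def assign_labels(X, centres):
    """E-step sketch: return the index of the nearest centre for each row of X."""
    # Difference vectors via broadcasting, shape (n_points, n_clusters, n_dims)
    vectors = X[:, np.newaxis] - centres
    # Squared Euclidean distances, shape (n_points, n_clusters)
    sq_dist = np.sum(vectors ** 2, axis=-1)
    # argmin along the cluster axis gives one label per point
    return sq_dist.argmin(axis=1)

# Compare against the norm-based version used above, on random data
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(50, 2))
centres_demo = X_demo[:3]  # use the first three points as centres
labels_sq = assign_labels(X_demo, centres_demo)
labels_norm = np.linalg.norm(X_demo[:, np.newaxis] - centres_demo, axis=-1).argmin(axis=1)
print(np.array_equal(labels_sq, labels_norm))  # True
```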

Now, we need to update the cluster centres with the new arithmetic mean of the assigned data.

The data can be split into groups using boolean indexing (a boolean mask); for example, to get all of the points with label 0:

```python
X[labels == 0]
```

```
array([[-2.8808903 , 6.26769229],
       [-1.57671974, 4.95740592],
       [-2.5961812 , 7.07388663],
       [-1.05318608, 6.05389716],
       [-3.20005988, 7.17556829],
       [-2.52794495, 7.25002881],
       [-0.73000011, 6.25456272],
       [-2.23160429, 3.86114299],
       [-2.92947399, 6.94648866],
       [-3.2646624 , 7.29600538],
       [ 0.06344785, 5.42080362],
       [-2.58046743, 5.86683533],
       [-0.19685333, 6.24740851],
       [-4.18607625, 3.52420179],
       [-1.35874518, 6.1747602 ],
       [-1.66060557, 3.99562607],
       [ 0.11504439, 6.21385228],
       [-1.4677968 , 6.75142339],
       [-2.97343871, 3.71818021],
       [-2.65421869, 7.24540237],
       [-1.68457103, 5.17460576],
       [-1.47086146, 6.9223808 ],
       [-0.17005847, 5.27627535],
       [-0.27652528, 5.08127768],
       [-0.29421492, 5.27318404],
       [-1.18799989, 4.93892582],
       [-0.51498751, 4.74317903],
       [-2.05388228, 6.71714809],
       [-1.64064477, 4.78931696],
       [-0.79447386, 6.0057196 ],
       [-0.65392827, 4.76656958],
       [-3.2827946 , 3.36881672],
       [-0.18887976, 5.20461381],
       [-2.20876016, 7.04234265],
       [-0.38565976, 5.17984092],
       [-2.21091491, 4.57743306],
       [-0.09448254, 5.35823905],
       [-2.58153248, 3.7381301 ],
       [-1.76577482, 6.85663016],
       [-1.03477573, 6.62688636]])
```

Above are all the data points assigned to the first cluster centre (label 0). Their mean along the data-point axis (axis=0) can be found as follows.

```python
X[labels == 0].mean(axis=0)
```

```
array([-1.60965388, 5.6484172 ])
```
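The same mask-then-mean pattern on a toy array small enough to check by hand (the values and the names points and labels_toy are invented for illustration):

```python
import numpy as np

# Four 2-D points with hypothetical labels: two in cluster 0, two in cluster 1
points = np.array([[0.0, 0.0],
                   [2.0, 2.0],
                   [10.0, 10.0],
                   [12.0, 8.0]])
labels_toy = np.array([0, 0, 1, 1])

mask = labels_toy == 1        # boolean mask: [False, False, True, True]
cluster_1 = points[mask]      # keeps only the last two rows
print(cluster_1.mean(axis=0).tolist())  # [11.0, 9.0]
```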

We can do this for all four clusters with a list comprehension.

```python
new_centres = np.array([X[labels == i].mean(axis=0) for i in range(n_clusters)])
```
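One caveat with this list comprehension: if no point happens to be assigned to a cluster, X[labels == i] is empty, its mean is nan, and NumPy emits a RuntimeWarning. A simple workaround, sketched below with a helper name of our own (update_centres), is to leave such a centre where it was; re-seeding it at a random data point is another common choice.

```python
import numpy as np

def update_centres(X, labels, centres):
    """M-step sketch: move each centre to the mean of its assigned points.

    A centre with no assigned points keeps its previous position instead of becoming nan.
    """
    new_centres = centres.copy()  # do not modify the caller's array
    for k in range(len(centres)):
        members = X[labels == k]
        if len(members) > 0:
            new_centres[k] = members.mean(axis=0)
    return new_centres

# Illustrative values: nothing is assigned to centre 2
X_demo = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]])
centres_demo = np.array([[1.0, 1.0], [5.0, 5.0], [100.0, 100.0]])
labels_demo = np.array([0, 0, 1])
print(update_centres(X_demo, labels_demo, centres_demo).tolist())
# [[1.0, 0.0], [10.0, 10.0], [100.0, 100.0]]
```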

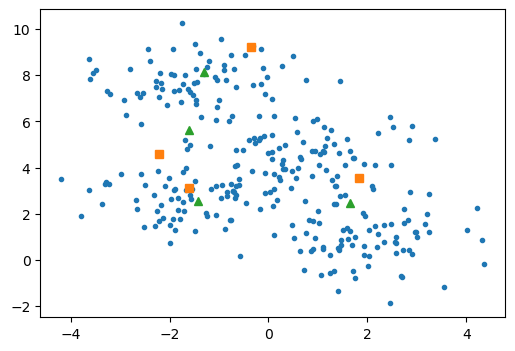

The new centres can be visualised below (this time with triangles).

fig, ax = plt.subplots(figsize=(6, 4))

ax.plot(X[:, 0], X[:, 1], '.')

ax.plot(centres[:, 0], centres[:, 1], 's')

ax.plot(new_centres[:, 0], new_centres[:, 1], '^')

plt.show()

Finally, we overwrite centres with the new values, completing the M-step.

```python
centres = new_centres
```

This process should be repeated until the centres stop changing, so we can use an iterative approach. See the code cell below, which repeats the E- and M-steps until the total change in the centres falls below a small tolerance.

```python
import numpy as np
from sklearn.datasets import make_blobs

# Generate 300 points in four blobs directly with scikit-learn
X, y_true = make_blobs(n_samples=300, centers=4, cluster_std=1, random_state=1)
n_clusters = 4

# Initial guess: n_clusters distinct data points chosen at random
i = np.random.choice(X.shape[0], size=n_clusters, replace=False)
centres = X[i]

diff = np.inf
while np.sum(diff) > 1e-8:
    # E-step: assign each point to its nearest centre
    vectors = X[:, np.newaxis] - centres
    magnitudes = np.linalg.norm(vectors, axis=-1)
    labels = magnitudes.argmin(axis=1)
    # M-step: move each centre to the mean of its assigned points
    new_centres = np.array([X[labels == i].mean(axis=0) for i in range(n_clusters)])
    diff = np.abs(centres - new_centres)
    centres = new_centres
```
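The same loop can be packaged as a reusable function. This is a minimal sketch under a few assumptions of our own: the function name kmeans, the max_iter safety cap and the tol tolerance are hypothetical choices, it uses squared distances, and an empty cluster keeps its previous centre. It also records the within-cluster sum of squared distances at each iteration, so you can check that this quantity never increases.

```python
import numpy as np

def kmeans(X, n_clusters, max_iter=100, tol=1e-8, seed=None):
    """Minimal k-means sketch returning (centres, labels, objective history)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initial guess: n_clusters distinct data points chosen at random
    centres = X[rng.choice(X.shape[0], size=n_clusters, replace=False)]
    history = []
    for _ in range(max_iter):
        # E-step: squared distances (n_points, n_clusters) and nearest-centre labels
        sq_dist = np.sum((X[:, np.newaxis] - centres) ** 2, axis=-1)
        labels = sq_dist.argmin(axis=1)
        # Within-cluster sum of squared distances for the current labels and centres
        history.append(sq_dist.min(axis=1).sum())
        # M-step: mean of the assigned points; an empty cluster keeps its old centre
        new_centres = centres.copy()
        for k in range(n_clusters):
            if np.any(labels == k):
                new_centres[k] = X[labels == k].mean(axis=0)
        shift = np.abs(new_centres - centres).sum()
        centres = new_centres
        if shift <= tol:
            break
    # Final labels consistent with the returned centres
    labels = np.sum((X[:, np.newaxis] - centres) ** 2, axis=-1).argmin(axis=1)
    return centres, labels, history


# Usage on synthetic data: three blobs of 50 points each (illustrative values)
rng = np.random.default_rng(1)
X_demo = np.vstack([rng.normal(loc, 0.5, size=(50, 2))
                    for loc in ([0, 0], [5, 5], [0, 5])])
centres_demo, labels_demo, history = kmeans(X_demo, n_clusters=3, seed=0)
print(len(history), history[0] >= history[-1])
```

The max_iter cap is a design choice rather than a necessity: it guarantees termination even if floating-point noise keeps the centres jittering just above the tolerance.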

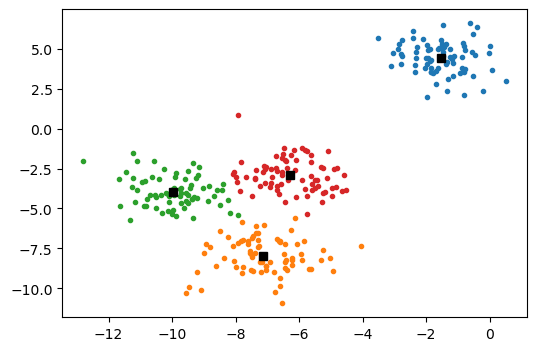

We show the results below, with the different clusters identified by colour and the cluster centres marked with a black square.

from sklearn.cluster import KMeans

fig, ax = plt.subplots(figsize=(6, 4))

for i in range(n_clusters):

ax.plot(X[labels == i][:, 0], X[labels == i][:, 1], '.')

ax.plot(centres[:, 0], centres[:, 1], 'ks')

plt.show()

There are some important issues that one should be aware of in using the simple E-M algorithm discussed above:

- The globally optimal result may never be achieved. As with many optimisation routines, although each iteration never makes the result worse, the algorithm may converge to a local optimum rather than the global one.
- k-means is limited to linear cluster boundaries. Because k-means assigns each point to its nearest centre by Euclidean distance, the boundary between any two clusters is a straight line (a hyperplane in higher dimensions), so the clustering cannot capture more complex geometries.
- k-means can be slow for a large number of samples. Each iteration must access every point in the dataset (and, in our implementation, it accesses each point n_clusters times)!
- The number of clusters must be selected beforehand. We must have some a priori knowledge about our dataset to apply k-means clustering effectively.
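A common mitigation for the first issue is to run k-means several times from different random starting centres and keep the run with the lowest within-cluster sum of squared distances; scikit-learn's KMeans does something similar through its n_init parameter. The sketch below is self-contained; the function names lloyd and best_of_restarts are hypothetical, and empty clusters simply keep their previous centre.

```python
import numpy as np

def lloyd(X, centres, max_iter=100):
    """Run E/M steps from the given centres; return (centres, labels, objective)."""
    for _ in range(max_iter):
        sq_dist = np.sum((X[:, np.newaxis] - centres) ** 2, axis=-1)
        labels = sq_dist.argmin(axis=1)
        # M-step; an empty cluster keeps its previous centre
        new_centres = np.array([
            X[labels == k].mean(axis=0) if np.any(labels == k) else centres[k]
            for k in range(len(centres))
        ])
        converged = np.allclose(new_centres, centres)
        centres = new_centres
        if converged:
            break
    sq_dist = np.sum((X[:, np.newaxis] - centres) ** 2, axis=-1)
    return centres, sq_dist.argmin(axis=1), sq_dist.min(axis=1).sum()

def best_of_restarts(X, n_clusters, n_restarts=10, seed=None):
    """Repeat k-means from random distinct starting points; keep the lowest objective."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_restarts):
        start = X[rng.choice(X.shape[0], size=n_clusters, replace=False)]
        result = lloyd(X, start)
        if best is None or result[2] < best[2]:
            best = result
    return best


# Usage on synthetic data: four blobs of 50 points each (illustrative values)
rng = np.random.default_rng(2)
X_demo = np.vstack([rng.normal(loc, 0.5, size=(50, 2))
                    for loc in ([0, 0], [5, 5], [0, 5], [5, 0])])
centres, labels, objective = best_of_restarts(X_demo, n_clusters=4, n_restarts=10, seed=0)
print(objective)
```

Restarts do not guarantee the global optimum either; they only make a poor local optimum less likely, at the cost of n_restarts times the work.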

This final point is a common concern in k-means clustering and other clustering algorithms. Therefore, let’s look at a popular tool used to find the “correct” number of clusters.