Convolutional Neural Networks

The convolutional neural network has become extremely popular for the classification of images, though it can also be applied to non-image data. It is built on the convolutional layer, which applies a set of filters (also called kernels) to the data, helping the network extract meaningful patterns such as edges, textures and shapes.
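To make the idea of applying a filter concrete, here is a minimal sketch using NumPy. The helper `conv2d`, the toy 6×6 image and the choice of a Sobel kernel are assumptions made for illustration; in a real convolutional layer the kernel values are learned during training rather than fixed by hand.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Strictly this is cross-correlation, which is the operation that
    deep learning libraries usually refer to as convolution.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel with the patch beneath it
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image (illustrative): dark left half, bright right half
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked Sobel kernel that responds to vertical edges
sobel_x = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])

response = conv2d(image, sobel_x)
print(response)
```

The response is zero where the image is uniform and non-zero only where the kernel straddles the boundary between the dark and bright halves, which is the sense in which a filter "extracts" an edge.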

AlexNet

AlexNet is probably the most famous convolutional neural network. It was developed in 2012 by Alex Krizhevsky (hence the name) with Ilya Sutskever (co-founder of OpenAI) and Geoffrey Hinton (winner of the 2024 Nobel Prize in Physics). The AlexNet model had 60 000 000 parameters and 650 000 neurons.

Many see AlexNet as a step change in image classification. In the ImageNet Large Scale Visual Recognition Challenge in 2012, it significantly outperformed the competition. This excellent performance was achieved using graphics processing units (GPUs) for training.

Problems with Convolutional Neural Networks

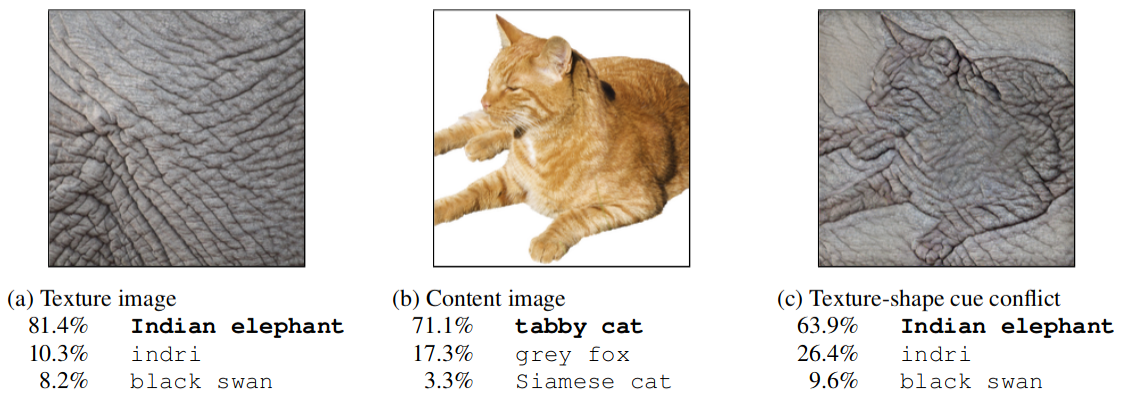

As noted above, convolutional neural networks can extract meaningful patterns such as edges, textures, and shapes. However, this can lead to some problems; for example, consider the images in Fig. 38. Here, we have the texture of an Indian elephant, an image of a tabby cat, and the texture of an Indian elephant combined with the outline (edges) of a tabby cat.

Fig. 38 Classification of a standard ResNet-50 of (a) elephant skin: only texture cues; (b) a normal image of a cat; and (c) an image with a texture-shape cue conflict generated by style transfer between the first two images. Reproduced from arXiv:1811.12231.

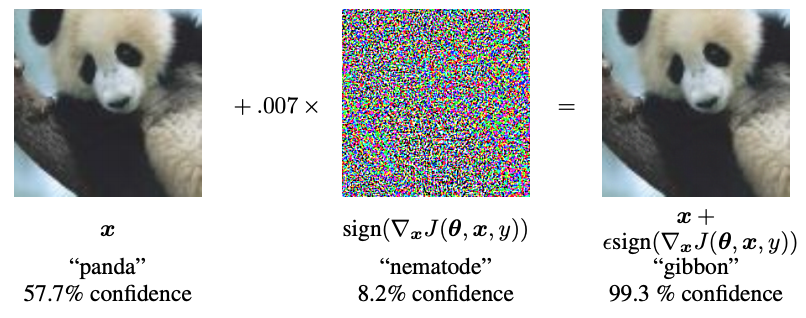

The first two images are correctly classified by the standard ResNet-50 (a convolutional neural network), but for the final one the network appears to have relied on the texture rather than the edges. This indicates a texture bias in the ResNet-50 network. Similar problems have been observed when small amounts of noise are added to an image.

Fig. 39 An example where adding a small amount of apparently random noise to an image leads to strong misclassification. Reproduced from arXiv:1412.6572.
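The noise in examples like this only appears random: in the paper cited above it is chosen using the sign of the gradient of the loss with respect to the input, an approach known as the fast gradient sign method. The sketch below illustrates the idea on a toy logistic classifier rather than a convolutional neural network. The weights `w`, the input `x` and the step size `eps` are all made-up values for illustration, not taken from the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(x, w, b):
    """Return class 1 if the predicted probability exceeds 0.5, else 0."""
    return int(sigmoid(w @ x + b) > 0.5)

# Hypothetical linear classifier acting on a 100-"pixel" input
w = np.where(np.arange(100) % 2 == 0, 1.0, -1.0)
b = 0.0

# Hypothetical input with pixel values close to 0.5, true label y = 1
x = 0.5 + 0.02 * w
y = 1

# Gradient of the binary cross-entropy loss with respect to the input:
# for a logistic model this is (p - y) * w
p = sigmoid(w @ x + b)
grad_x = (p - y) * w

# Fast gradient sign step: move every pixel by at most eps
# in the direction that increases the loss
eps = 0.05
x_adv = x + eps * np.sign(grad_x)

print("original prediction:   ", predict(x, w, b))
print("adversarial prediction:", predict(x_adv, w, b))
print("largest pixel change:  ", np.max(np.abs(x_adv - x)))
```

No single pixel changes by more than `eps`, yet the small changes all push the score in the same direction, so their combined effect across many pixels is enough to flip the prediction.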

Another important observation of bias in image recognition is that various facial recognition systems perform worst for minority groups, with an accuracy difference of up to 34 % between lighter-skinned males and darker-skinned females. This is often due to limitations in the training data, which lead to biases in the resulting classifications. These biases may have major repercussions now that such systems can be used in areas such as law enforcement and employment screening. For more on this work, read about the Gender Shades project.

Further Reading

Given the popularity of machine learning approaches, including convolutional neural networks, a broad range of online materials can be used to supplement your understanding. I recommend the following CNN Explainer webpages.