Special Topics in Artificial Intelligence and Deep Learning

Special Topics in Artificial Intelligence and Deep Learning is an advanced course designed for master’s-level students seeking to deepen their understanding of cutting-edge developments in AI and deep learning. Building on foundational knowledge of machine learning, it explores specialised topics such as generative models, reinforcement learning, and interpretability in AI, as well as applications in fields such as natural language processing, computer vision, and bioinformatics.

Students will engage with the theoretical underpinnings, implementation details, and real-world challenges of modern AI systems, gaining hands-on experience with state-of-the-art tools and frameworks. The course emphasises critical thinking, enabling students to evaluate current research and understand emerging trends in the rapidly evolving field of AI.

Whether students are preparing for a career in AI research or in industry, this course provides the skills and insights needed to tackle complex problems and contribute to the advancement of artificial intelligence.

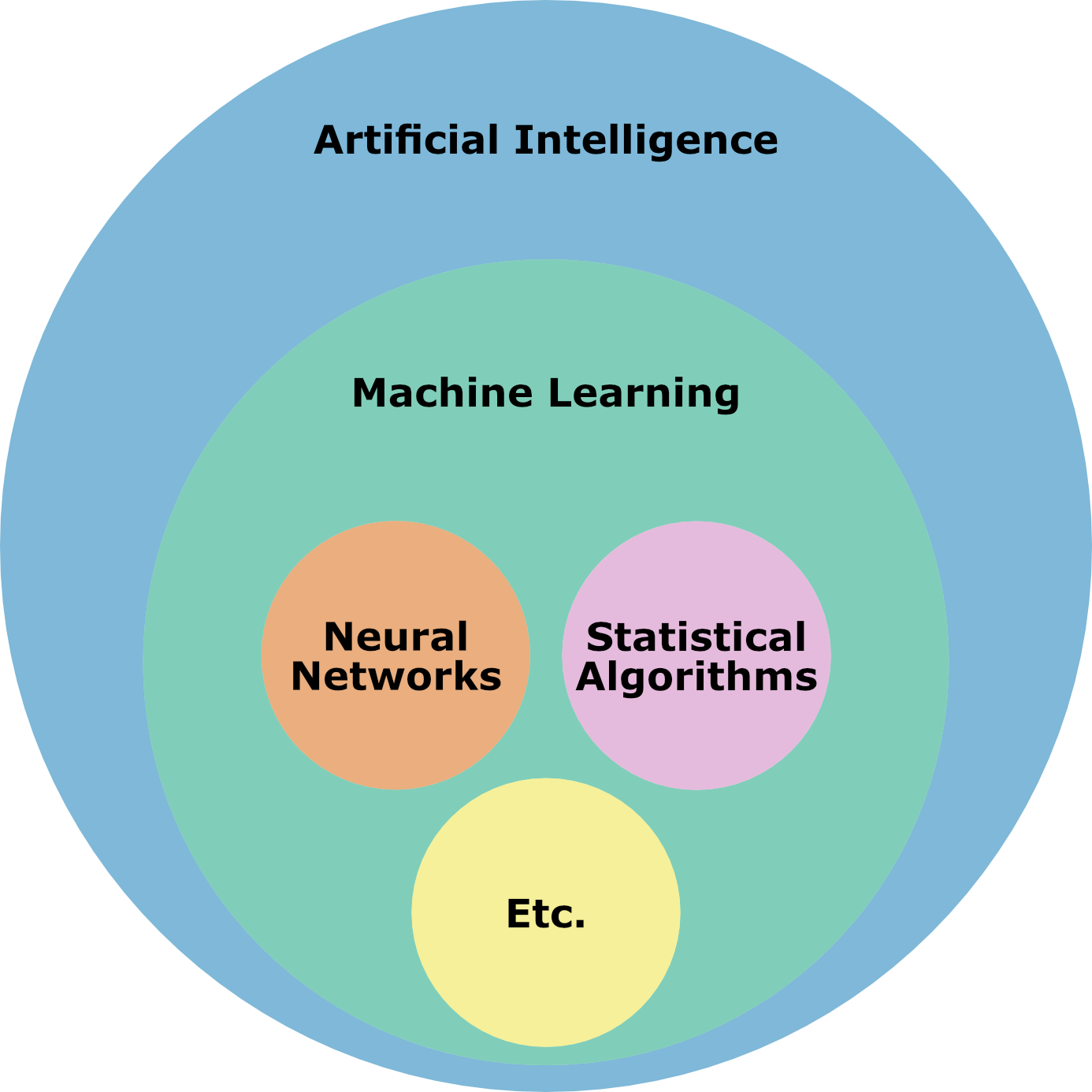

Fig. 1 A Venn diagram showing the overlap of artificial intelligence, machine learning, and the underlying statistical methods.

Contributors

Andrew R. McCluskey (Bristol)

Harry Richardson (Bristol)


Disclaimer

We track the usage of this webpage with Google Analytics. The purpose of this tracking is to understand how this material is used, not who is using it.