Recurrent Neural Networks

The neural networks we have investigated so far are sometimes called feedforward neural networks. They can be limiting because the network processes each input independently of the others. Recurrent neural networks remove this independence: the output of a neuron for a given data point is fed back in as an input for the following data point. This makes recurrent neural networks well suited to modelling sequential data, such as text, speech and time series. Research on recurrent networks for sequence modelling, including the attention mechanism first used in recurrent encoder-decoder models, paved the way for the transformer networks behind today's large language models.

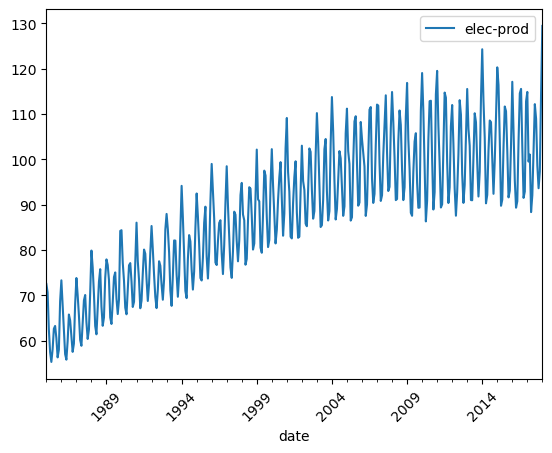
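The difference between processing inputs independently and feeding the previous output back in can be seen with a single scalar neuron. The sketch below is illustrative only: the weights `w_x`, `w_h`, `b`, the helper names `feedforward` and `recurrent`, and the short input sequence are all assumed values chosen for the example. Reversing the sequence merely reverses the feedforward outputs, whereas the recurrent neuron's final state changes, because it depends on the order in which the inputs arrive.

```python
import numpy as np

# Illustrative scalar weights (assumed values, not from the text)
w_x, w_h, b = 0.5, 0.8, 0.0

def feedforward(x):
    """Each input is transformed on its own; nothing is carried over."""
    return np.tanh(w_x * x + b)

def recurrent(sequence):
    """Feed the previous hidden state back in at every step; return the final state."""
    h = 0.0
    for x in sequence:
        h = np.tanh(w_x * x + w_h * h + b)
    return h

seq = [1.0, 0.5, 0.0]
rev = seq[::-1]

# Feedforward outputs for the reversed sequence are the original outputs, reversed
ff_seq = [feedforward(x) for x in seq]
ff_rev = [feedforward(x) for x in rev]
print(np.allclose(ff_seq[::-1], ff_rev))  # True

# The recurrent neuron's final hidden state depends on the input order
print(recurrent(seq), recurrent(rev))
```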

We will use a simple time series dataset of electricity production to build a simple recurrent neural network. You can download the dataset here.

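```python
import pandas as pd
import matplotlib.pyplot as plt

# Load the electricity production time series and parse the date column
timeseries = pd.read_csv('../data/electric.csv')
timeseries['date'] = pd.to_datetime(timeseries['date'])

# Plot production against date, rotating the date labels for readability
fig, ax = plt.subplots()
timeseries.plot(x='date', y='elec-prod', ax=ax)
ax.tick_params(axis='x', labelrotation=45)
plt.show()
```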

Feedback

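As mentioned above, the difference between a traditional neural network and a recurrent neural network is that the latter includes the hidden state of the previous data point in the activation function. That means that, for some activation function \(f\), weight matrix \(W_x\) and bias \(b\), the hidden state \(h_i\) of a traditional network for the input \(x_i\) is given by

\[
h_i = f(W_x x_i + b),
\]

while for a recurrent network we add the previous hidden state, \(h_{i-1}\), weighted by a second matrix \(W_h\):

\[
h_i = f(W_x x_i + W_h h_{i-1} + b).
\]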

We implement a simple feedback perceptron with Python below, using a tanh activation function.

```python
import numpy as np

class SimpleRNNPerceptron:
    """
    A simple RNN perceptron with a single hidden layer.

    :param input_size: The size of the input vector
    :param hidden_size: The size of the hidden layer
    """

    def __init__(self, input_size, hidden_size):
        self.hidden_size = hidden_size
        # Small random weights for the input (W_x) and recurrent (W_h) connections
        self.W_x = np.random.randn(hidden_size, input_size) * 0.1
        self.W_h = np.random.randn(hidden_size, hidden_size) * 0.1
        self.b = np.zeros((hidden_size, 1))
        # The hidden state starts at zero and is carried over between calls to step()
        self.h_i = np.zeros((hidden_size, 1))

    def step(self, x_i):
        """
        Processes a single time step.

        :param x_i: The input vector
        :return: The updated hidden state, with shape (hidden_size, 1)
        """
        x_i = x_i.reshape(-1, 1)
        # h_i = tanh(W_x x_i + W_h h_{i-1} + b)
        self.h_i = np.tanh(np.dot(self.W_x, x_i) + np.dot(self.W_h, self.h_i) + self.b)
        return self.h_i

rnn = SimpleRNNPerceptron(input_size=1, hidden_size=5)
```
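Unrolling the update performed by `step` over the first three inputs, starting from the zero hidden state \(h_0 = 0\) set in `__init__`, shows why the network has memory: \(h_3\) still depends on \(x_1\) through the nested recurrent terms.

\[
\begin{aligned}
h_1 &= \tanh(W_x x_1 + W_h h_0 + b) = \tanh(W_x x_1 + b), \\
h_2 &= \tanh(W_x x_2 + W_h h_1 + b), \\
h_3 &= \tanh\big(W_x x_3 + W_h \tanh(W_x x_2 + W_h \tanh(W_x x_1 + b) + b) + b\big).
\end{aligned}
\]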

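We can run this network over a single year of our time series data (2017), one value at a time, after scaling the values so that the largest is 1. The hidden size is the number of neurons in the hidden layer; with a hidden size of 5, each hidden state is a vector of five values. Since the weights are random and untrained, these hidden states only illustrate how each step's state feeds into the next, and your numbers will differ from those shown.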

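```python
# Select the 2017 values and scale them so that the largest value is 1
timeseries_2017 = timeseries.loc[timeseries['date'].dt.year == 2017, 'elec-prod']
timeseries_2017 = timeseries_2017 / timeseries_2017.max()

# Feed the values in one at a time; the hidden state carries over between steps
for i, x_i in enumerate(timeseries_2017):
    h_i = rnn.step(np.array([x_i]))
    print(f"Step {i+1}: Hidden State = {h_i.ravel()}")
```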

```
Step 1: Hidden State = [ 0.01817811 -0.03460612 0.25239101 0.09089611 0.07643179]
Step 2: Hidden State = [-0.02408365 -0.07772213 0.23904544 0.08753984 0.08339 ]
Step 3: Hidden State = [-0.02206769 -0.0762449 0.23829721 0.07873544 0.08808943]
Step 4: Hidden State = [-0.02086852 -0.07161701 0.210359 0.06916788 0.08140937]
Step 5: Hidden State = [-0.01535771 -0.06734796 0.21629502 0.07146435 0.08227063]
Step 6: Hidden State = [-0.01462962 -0.07142449 0.23857348 0.08071167 0.08885546]
Step 7: Hidden State = [-0.01690913 -0.07872003 0.26136567 0.08911467 0.09687761]
Step 8: Hidden State = [-0.02103426 -0.08211194 0.25604964 0.08645091 0.09672695]
Step 9: Hidden State = [-0.02156553 -0.07800667 0.23349806 0.07727998 0.09014666]
Step 10: Hidden State = [-0.01845623 -0.07215846 0.22108521 0.07281175 0.08540913]
Step 11: Hidden State = [-0.01588816 -0.07087819 0.22836957 0.07621947 0.08660469]
Step 12: Hidden State = [-0.01455829 -0.07750016 0.26582148 0.09063649 0.09818259]
```
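One design point worth noting: `SimpleRNNPerceptron` keeps `h_i` between calls to `step`, so running the loop a second time would start from the final 2017 state rather than from zero. A minimal sketch of a stateless alternative is below; the helper `run_sequence` is hypothetical, and the weights and 12 inputs are random placeholders rather than the electricity data. It starts each sequence from a zero hidden state and returns all hidden states as one array.

```python
import numpy as np

def run_sequence(W_x, W_h, b, xs):
    """Hypothetical helper: run the tanh recurrence over xs from a zero state.

    W_x: (hidden, input), W_h: (hidden, hidden), b: (hidden, 1), xs: (T, input).
    Returns the hidden states stacked into an array of shape (T, hidden).
    """
    h = np.zeros((W_h.shape[0], 1))  # fresh hidden state for every sequence
    states = []
    for x in xs:
        x = np.asarray(x, dtype=float).reshape(-1, 1)
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h.ravel())
    return np.stack(states)

rng = np.random.default_rng(0)
hidden_size, input_size = 5, 1
W_x = rng.standard_normal((hidden_size, input_size)) * 0.1
W_h = rng.standard_normal((hidden_size, hidden_size)) * 0.1
b = np.zeros((hidden_size, 1))

xs = rng.random((12, input_size))  # placeholder for 12 scaled values
H = run_sequence(W_x, W_h, b, xs)
print(H.shape)  # (12, 5)

# Because the state is reset on each call, repeated runs give identical results
print(np.allclose(H, run_sequence(W_x, W_h, b, xs)))  # True
```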

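The larger the hidden size, the more capacity the network has to learn complex patterns, but the cost grows quickly: the recurrent weight matrix \(W_h\) has one entry per pair of hidden neurons, so its size, and the work done at every step, grows with the square of the hidden size.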
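Counting the parameters of the perceptron above makes this growth concrete. The function name `rnn_parameter_count` is hypothetical; it simply adds up the entries of \(W_x\), \(W_h\) and \(b\) for an input size of 1.

```python
def rnn_parameter_count(input_size, hidden_size):
    """Entries in W_x (hidden x input), W_h (hidden x hidden) and b (hidden)."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size

for hidden_size in (5, 50, 500):
    print(hidden_size, rnn_parameter_count(1, hidden_size))
# 5 35
# 50 2600
# 500 251000
```

A tenfold increase in hidden size gives roughly a hundredfold increase in parameters, dominated by \(W_h\). PyTorch's `nn.RNN` keeps two bias vectors per layer, so its count is larger by `hidden_size`.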

Implementation with PyTorch

The Python library PyTorch comes with an implementation of recurrent neural networks, `torch.nn.RNN`.
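As a minimal sketch, assuming PyTorch is installed, the block below runs a single-layer `nn.RNN` (tanh by default, like our perceptron) on a random placeholder sequence of 12 values, then repeats the same recurrence by hand with NumPy using PyTorch's own weights. The two final hidden states agree, which shows that `nn.RNN` computes the recurrent update described earlier, except that it stores two bias vectors that simply add together.

```python
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(0)
hidden_size, input_size, seq_len = 5, 1, 12

# batch_first=True means inputs have shape (batch, sequence length, features)
rnn = nn.RNN(input_size=input_size, hidden_size=hidden_size, batch_first=True)

x = torch.rand(1, seq_len, input_size)  # placeholder sequence, batch of one
output, h_n = rnn(x)
print(output.shape)  # torch.Size([1, 12, 5]): hidden state at every step
print(h_n.shape)     # torch.Size([1, 1, 5]): final hidden state per layer

# Repeat the recurrence by hand with PyTorch's weights; b_ih and b_hh add together
W_x = rnn.weight_ih_l0.detach().numpy()
W_h = rnn.weight_hh_l0.detach().numpy()
b = (rnn.bias_ih_l0 + rnn.bias_hh_l0).detach().numpy().reshape(-1, 1)

h = np.zeros((hidden_size, 1))  # nn.RNN also starts from a zero state by default
for x_i in x[0].numpy():
    h = np.tanh(W_x @ x_i.reshape(-1, 1) + W_h @ h + b)

print(np.allclose(h.ravel(), h_n[0, 0].detach().numpy(), atol=1e-6))  # True
```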

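We advise you to study its documentation to understand how a recurrent neural network is implemented with PyTorch.

In particular, note how configurable this `nn.Module` is: the number of stacked layers, the nonlinearity (tanh or ReLU), whether bias terms are used, dropout between layers, bidirectionality and the input layout (`batch_first`) can all be set when the layer is created.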
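A short sketch of that configurability, assuming PyTorch is installed: the particular settings below (two layers, ReLU, dropout of 0.1, bidirectional) are arbitrary choices for illustration, and the input is a random batch of three placeholder sequences. The printed shapes show how stacking and bidirectionality change what the module returns.

```python
import torch
import torch.nn as nn

# Illustrative configuration: two stacked layers run in both directions,
# ReLU instead of tanh, and dropout applied between the two layers
rnn = nn.RNN(
    input_size=1,
    hidden_size=5,
    num_layers=2,
    nonlinearity='relu',
    batch_first=True,
    dropout=0.1,
    bidirectional=True,
)

x = torch.rand(3, 12, 1)  # batch of 3 placeholder sequences, 12 steps each
output, h_n = rnn(x)
print(output.shape)  # torch.Size([3, 12, 10]): forward and backward states concatenated
print(h_n.shape)     # torch.Size([4, 3, 5]): one final state per layer and direction
```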